Selected Publications

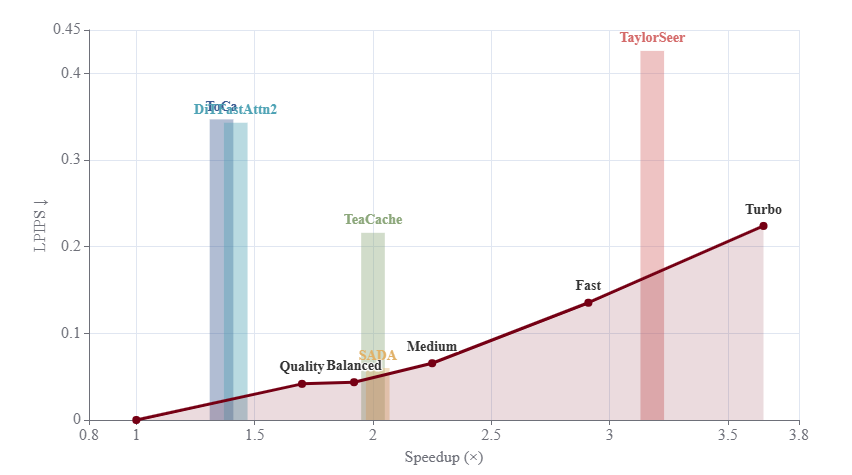

SADA: Stability-guided Adaptive Diffusion Acceleration

Ting Jiang*, Yixiao Wang*, Hancheng Ye*, Zishan Shao, Jingwei Sun, Jingyang Zhang, Zekai Chen, Jianyi Zhang, Yiran Chen, Hai Li

ICML 2025

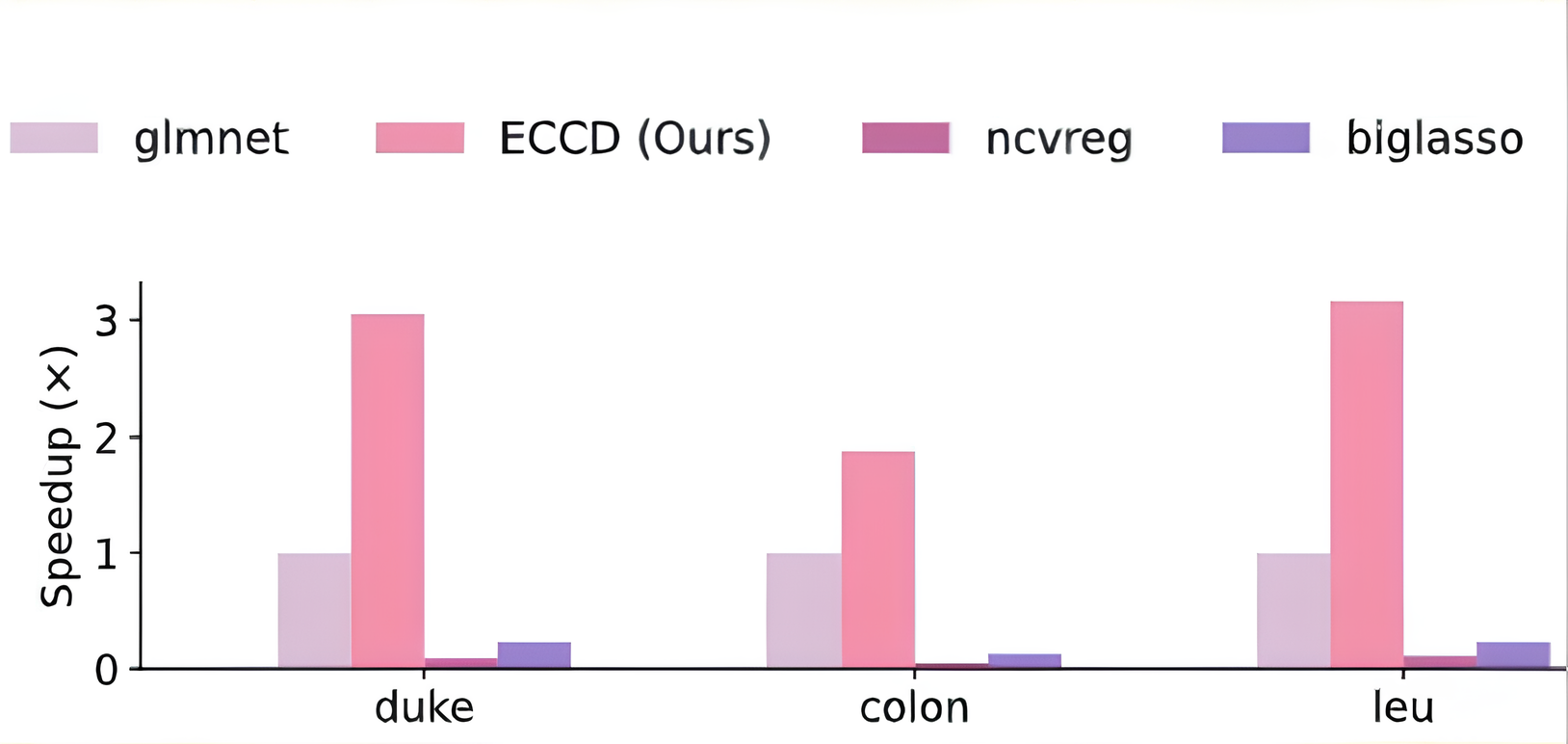

Enhanced Cyclic Coordinate Descent for Elastic Net GLMs

Yixiao Wang*, Zishan Shao*, Ting Jiang, Aditya Devarakonda

NeurIPS 2025

ZEUS: Zero-shot Efficient Unified Sparsity for Generative Models

Yixiao Wang*, Ting Jiang*, Zishan Shao*, Hancheng Ye, Jingwei Sun, Mingyuan Ma, Jianyi Zhang, Yiran Chen, Hai Li

ICLR 2026 (under review)

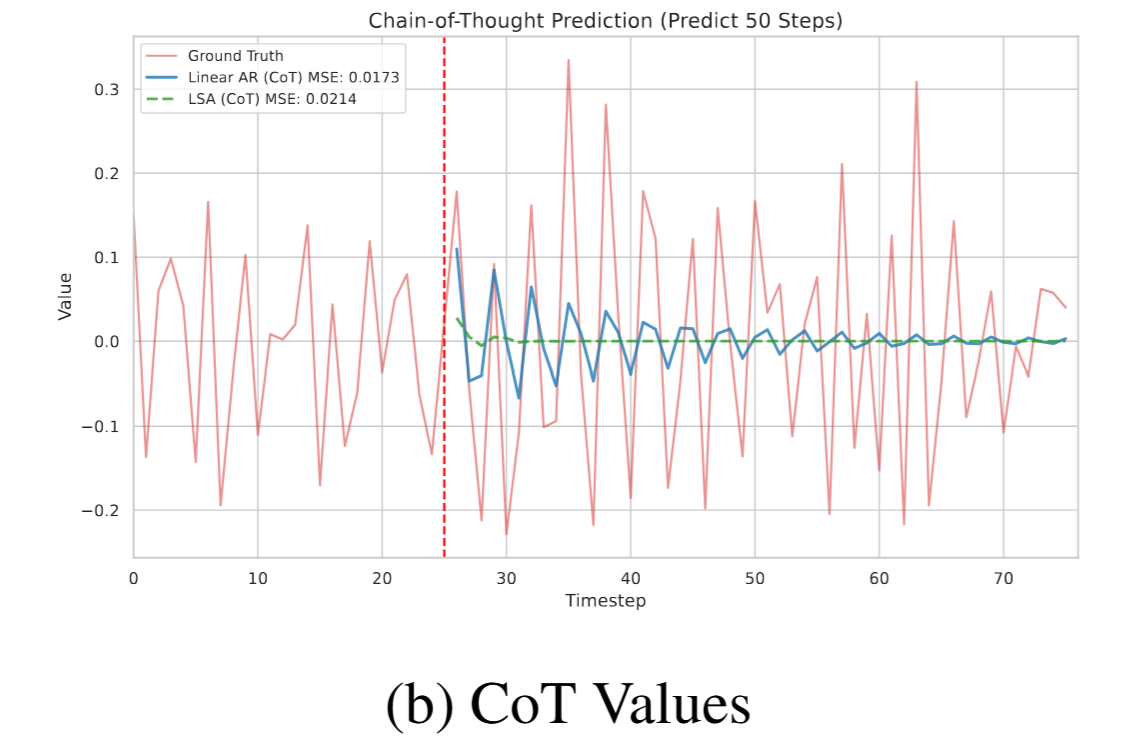

Why Do Transformers Fail to Forecast Time Series In-Context?

Yufa Zhou*, Yixiao Wang*, Surbhi Goel, Anru Zhang

NeurIPS 2025 Workshop on WCTD (oral, 3/68; acceptance rate 40%)

ICLR 2026 (under review)

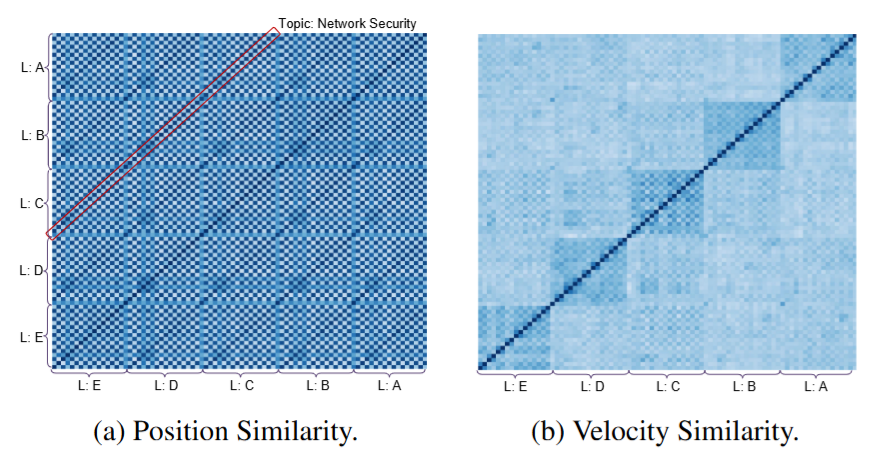

The Geometry of Reasoning: Flowing Logics in Representation Space

Yufa Zhou*, Yixiao Wang*, Xunjian Yin*, Shuyan Zhou, Anru Zhang

ICLR 2026 (under review)

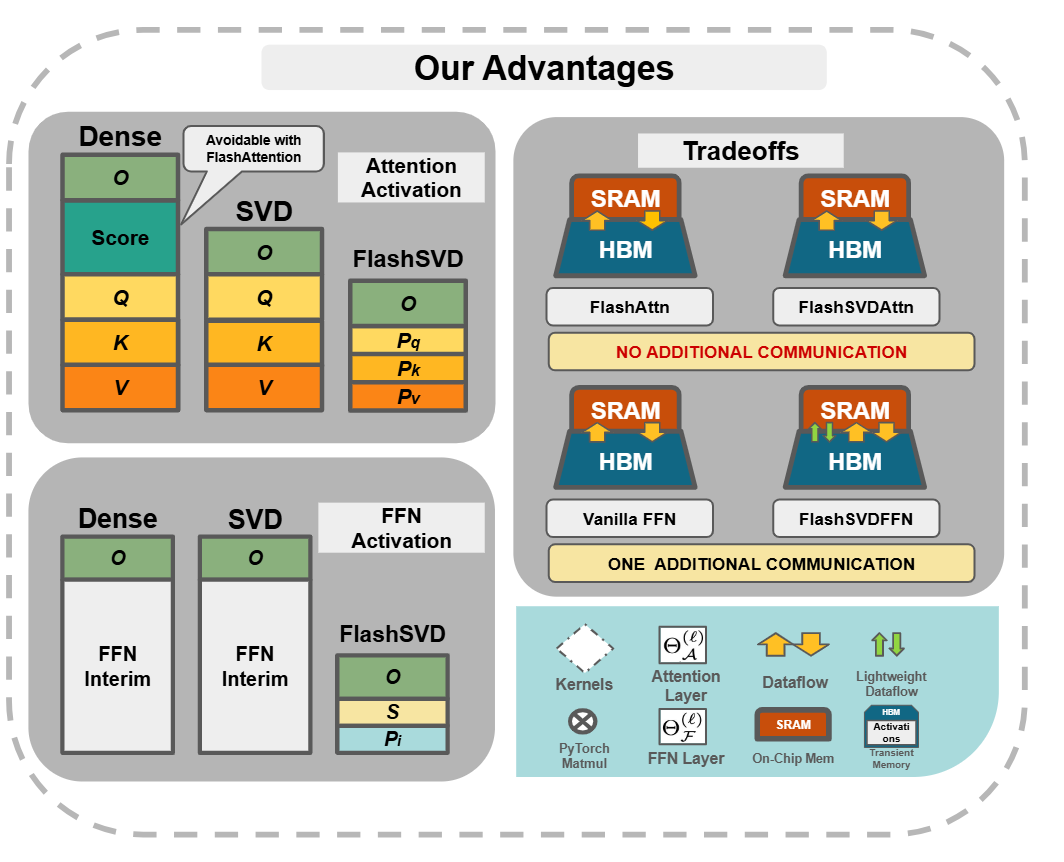

FlashSVD: Memory-Efficient Inference with Streaming for Low-Rank Models

Zishan Shao, Yixiao Wang, Qinsi Wang, Ting Jiang, Zhixu Du, Hancheng Ye, Danyang Zhuo, Yiran Chen, Hai Li

AAAI 2026

* Equal contribution